As operators push toward autonomous networks, one truth is becoming clear: the hidden barrier isn’t technology—it’s data quality. Automation is only as good as the data it runs on. Poor data quality doesn’t just cause inefficiency—it can break automation altogether. And with Generative AI now being embedded into operational workflows, the stakes are even higher. If the data feeding AI models is inconsistent, stale or incomplete, the output will be unreliable at best—and damaging at worst.

Nowhere is this challenge bigger than in telecom. Network data is messy and fragmented, spanning physical and logical connectivity, service hierarchies, customer records and configuration details. It lives across network element managers, inventories, service management platforms and customer systems, each tied to different vendors, domains and technologies and often split between legacy and modern architectures.

To make automation and AI work, operators need a way to bring all these disparate data sources together, understand them in context and ensure they are accurate.

Traditional data quality management (DQM) tools have relied on manual checks, static rules and siloed governance. They may find some errors but fail to scale across the complexity of telecom environments. What’s missing is a semantic foundation that not only detects issues but also reasons over them and drives accountability. This is where the Semantic Digital Twin comes in.

Why a Semantic Digital Twin Matters for DQM

Unlike conventional systems, a semantic digital twin models data with meaning and context. Each network element, service or customer isn’t just a record—it’s represented in relation to everything else, governed by a shared ontology.

This matters because in telecom, the same data often exists across multiple systems—each with its own version of the truth. A semantic digital twin can reason across these sources, identify conflicts, infer the most accurate state and surface a trusted single source of truth—a “golden record.”

This unlocks three powerful capabilities for DQM:

- Infer the best data sets: By reconciling discrepancies across sources, it determines which version of the data is most reliable.

- Reconcile data back into sources: It doesn’t stop at spotting errors—it feeds corrections into operational systems, keeping everything aligned.

- Link accountability to humans: Issues are not just anomalies; they’re tied to responsible owners who can drive resolution.

The result is a shift from passive reporting to active, systemic data improvement—a critical step toward autonomous operations.
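
To make the "golden record" idea concrete, here is a minimal sketch of one possible reconciliation rule: a trust-weighted vote per attribute, with recency as the tie-breaker. The source names, trust weights and the `Observation` type are all hypothetical and chosen for illustration; a real semantic digital twin would reason over an ontology rather than a flat list of values.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Observation:
    source: str          # hypothetical system name, e.g. "inventory"
    attribute: str       # e.g. "port_speed"
    value: str
    observed_at: datetime


# Assumed trust weight per source; the numbers are illustrative only.
SOURCE_TRUST = {"element_manager": 0.9, "inventory": 0.6, "service_platform": 0.5}


def infer_golden_record(observations):
    """Pick, per attribute, the value with the highest summed source trust.

    Ties are broken in favour of the most recently observed value.
    Returns {attribute: (chosen_value, [sources that disagree])}.
    """
    by_attr = defaultdict(list)
    for obs in observations:
        by_attr[obs.attribute].append(obs)

    golden = {}
    for attr, group in by_attr.items():
        score = defaultdict(float)
        latest = {}
        for obs in group:
            # Unknown sources get a low default weight (an assumption).
            score[obs.value] += SOURCE_TRUST.get(obs.source, 0.1)
            if obs.value not in latest or obs.observed_at > latest[obs.value]:
                latest[obs.value] = obs.observed_at
        best = max(score, key=lambda v: (score[v], latest[v]))
        # Sources holding a different value are candidates for correction.
        conflicts = sorted(o.source for o in group if o.value != best)
        golden[attr] = (best, conflicts)
    return golden


observations = [
    Observation("element_manager", "port_speed", "10G", datetime(2024, 5, 1)),
    Observation("inventory", "port_speed", "1G", datetime(2023, 11, 12)),
    Observation("service_platform", "port_speed", "10G", datetime(2024, 4, 20)),
]
print(infer_golden_record(observations))
# {'port_speed': ('10G', ['inventory'])}
```

The list of disagreeing sources is what would drive the second capability: those are the systems a correction would be written back to.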

A Two-Layer Approach to DQM

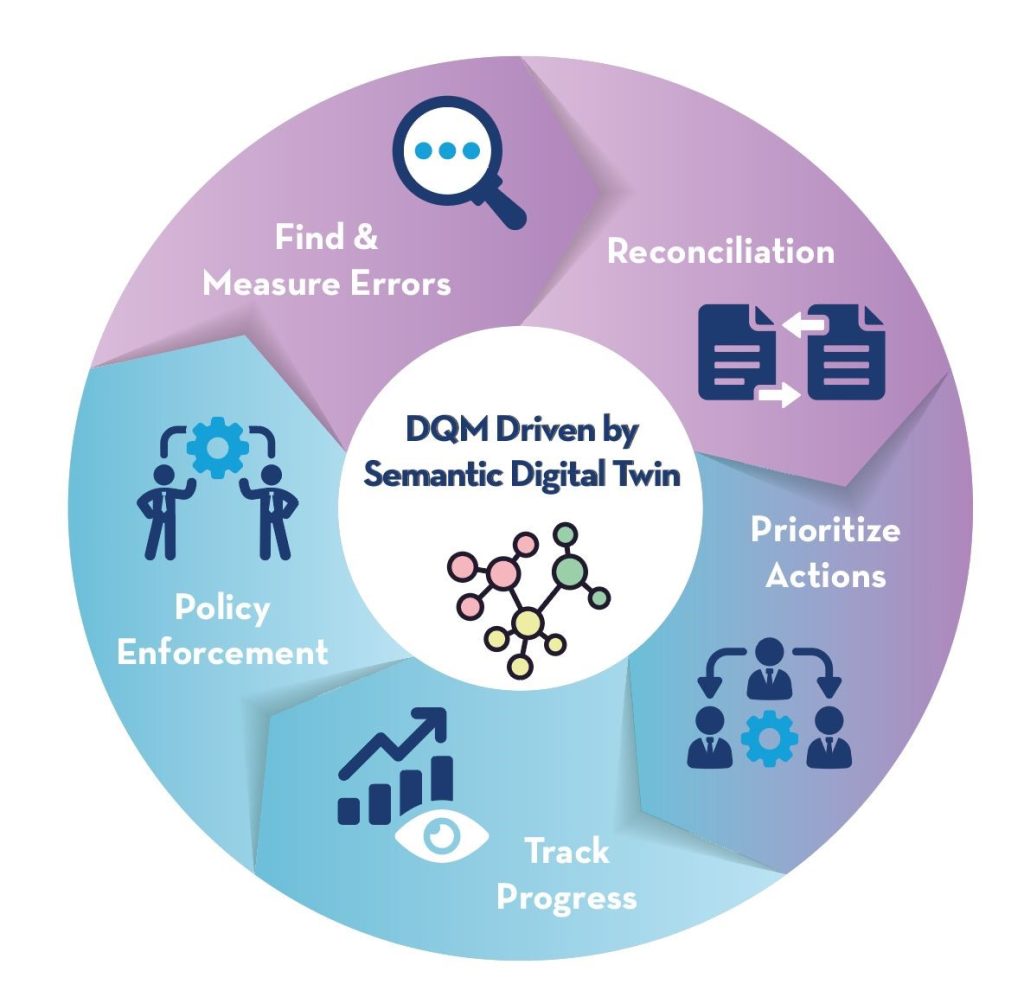

With a semantic digital twin, data quality management unfolds in two coordinated layers:

1. Foundation for DQM – Detection & Intelligence Layer

This is the “eyes and brain” of the process.

- Find & Measure Errors: Continuously identifies anomalies, design mismatches or missing/stale data.

- Reconciliation: Infers the most accurate state and recommends alignment across systems.

- Business-Driven Prioritization: Ranks remediation actions based on compliance, cost or customer impact.
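
The detection and prioritization steps above might be sketched as follows. This is an illustration under stated assumptions, not a reference implementation: the required fields, the 30-day staleness threshold, the impact weights and the record layout are all invented for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Issue:
    record_id: str
    kind: str             # "missing" or "stale"
    field: str
    priority: float = 0.0


REQUIRED_FIELDS = ("site", "vendor", "status")   # assumed mandatory attributes
MAX_AGE = timedelta(days=30)                     # assumed staleness threshold

# Assumed business weights for compliance, cost and customer impact.
IMPACT_WEIGHTS = {"compliance": 0.5, "cost": 0.2, "customer": 0.3}


def find_issues(records, now):
    """Flag empty required fields and records not synced within MAX_AGE."""
    issues = []
    for rec in records:
        for field in REQUIRED_FIELDS:
            if not rec.get(field):
                issues.append(Issue(rec["id"], "missing", field))
        if now - rec["last_synced"] > MAX_AGE:
            issues.append(Issue(rec["id"], "stale", "last_synced"))
    return issues


def prioritize(issues, impact_by_record):
    """Score each issue by weighted business impact and sort highest first."""
    for issue in issues:
        impact = impact_by_record.get(issue.record_id, {})
        issue.priority = sum(
            weight * impact.get(name, 0.0)
            for name, weight in IMPACT_WEIGHTS.items()
        )
    return sorted(issues, key=lambda i: i.priority, reverse=True)


now = datetime(2024, 6, 1)
records = [
    {"id": "r1", "site": "A", "vendor": "", "status": "up",
     "last_synced": now - timedelta(days=1)},
    {"id": "r2", "site": "B", "vendor": "X", "status": "up",
     "last_synced": now - timedelta(days=90)},
]
impact = {"r1": {"customer": 1.0}, "r2": {"compliance": 1.0}}
for issue in prioritize(find_issues(records, now), impact):
    print(issue.record_id, issue.kind, issue.field, issue.priority)
```

With these assumed weights, the stale record with compliance impact ranks above the record with a missing vendor, which is the kind of business-driven ordering the layer is meant to produce.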

2. Enforces DQM Policy – Human-in-the-Loop Coordination Layer

This is the coordination and enforcement layer of the process.

- Action Assignment & Accountability: Maps issues to the right teams or owners.

- Gap Tracking & Progress: Monitors how fixes progress and surfaces recurring issues.

- Policy Enforcement: Embeds into governance frameworks with escalation when needed.
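
As a rough illustration of the coordination layer, the sketch below routes each issue to an owner by network domain and escalates anything left unresolved past a deadline. The owner map, the fallback team, the seven-day SLA and the `Ticket` type are hypothetical; in practice these would come from the operator's own governance framework.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical mapping from network domain to the accountable team.
OWNERS = {"transport": "transport-ops", "ran": "ran-engineering"}
DEFAULT_OWNER = "data-governance"   # assumed fallback owner
SLA = timedelta(days=7)             # assumed resolution deadline


@dataclass
class Ticket:
    issue_id: str
    domain: str
    owner: str
    opened_at: datetime
    resolved: bool = False
    escalated: bool = False


def assign(issue_id, domain, now):
    """Map an issue to the team that owns its domain."""
    return Ticket(issue_id, domain, OWNERS.get(domain, DEFAULT_OWNER), now)


def enforce_sla(tickets, now):
    """Mark unresolved tickets older than the SLA as escalated and return them."""
    overdue = []
    for ticket in tickets:
        if not ticket.resolved and now - ticket.opened_at > SLA:
            ticket.escalated = True
            overdue.append(ticket)
    return overdue


opened = datetime(2024, 6, 1)
tickets = [
    assign("issue-1", "transport", opened),
    assign("issue-2", "core", opened),   # no mapped owner, uses the fallback
]
tickets[0].resolved = True
for ticket in enforce_sla(tickets, opened + timedelta(days=10)):
    print(ticket.issue_id, ticket.owner, "escalated")
```

Keeping the owner on the ticket itself is what makes gap tracking possible: recurring issues can be grouped by owner or domain to show where fixes are not holding.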

From Layers to a Continuous Process

The intelligence and coordination layers do not operate in isolation; they work together in a closed-loop cycle. Errors are detected, reconciled, assigned and tracked, and policy is enforced, as part of a continuous process.

This ensures that data quality management is not a one-off clean-up exercise but an ongoing engine of improvement. Issues are fixed at the source, progress is measured and policies are reinforced, driving lasting trust in the data that powers automation and AI.

Viewed as a cycle, DQM makes the role of the semantic digital twin clear: it’s the contextual brain at the center, ensuring every step is guided by intelligence, accountability and business relevance.

Closing the Loop

As the industry accelerates toward autonomous networks and GenAI-driven operations, data quality becomes the make-or-break factor. AI agents reasoning over poor data will produce poor decisions. Automation built on inconsistent inventories will fail to execute reliably.

A Semantic Digital Twin ensures that doesn’t happen. By combining detection, reasoning and accountability, it delivers a trusted foundation where AI and automation can operate safely and effectively. It doesn’t just highlight what’s broken—it helps fix it at the source and prevents it from coming back.

In short: if autonomous networks are the destination and GenAI is the brain, then a Semantic Digital Twin for DQM is the immune system, constantly monitoring, detecting and correcting unhealthy data so the whole automation body stays resilient, trusted and ready to perform.